Discussion questions

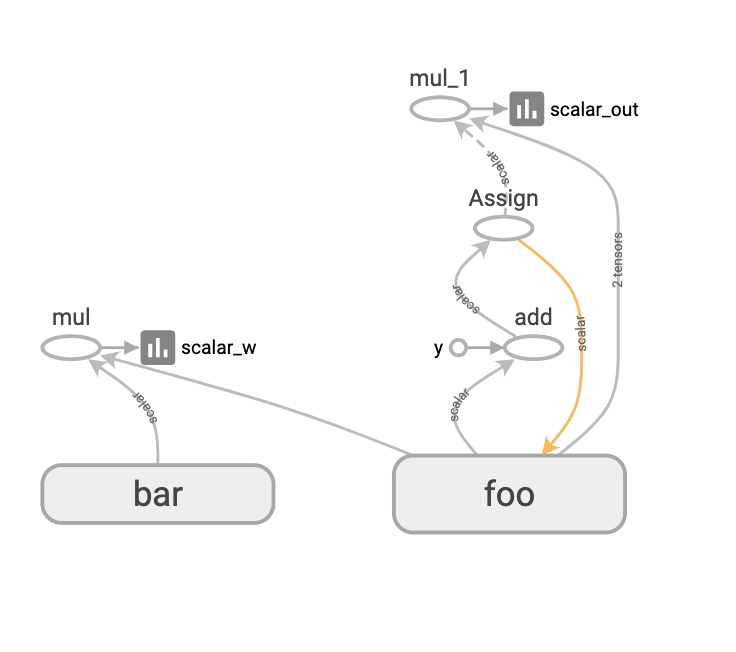

Add TensorBoard to the scope example, and observe how the visualized graph differs from the version without scopes.

```python
import tensorflow as tf
```

```shell
tensorboard --logdir tensorflow_excise/result
```

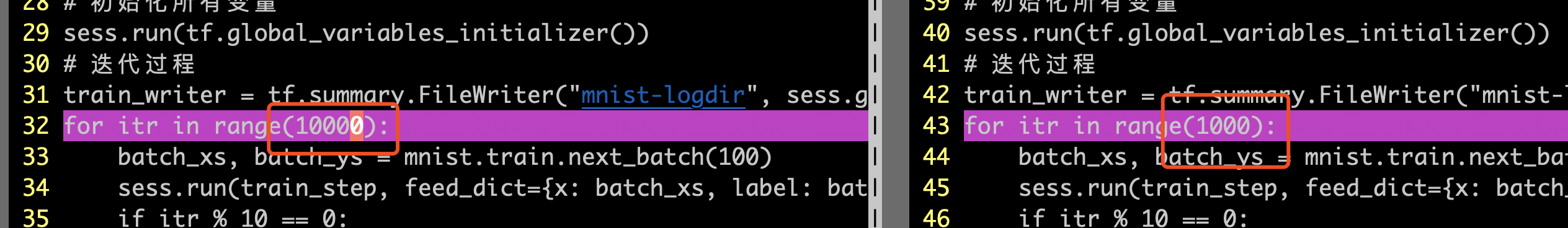

In the MNIST DNN example, the results of single-layer.py and single-layer-optimization.py differ. Find the cause.

Programming exercises

Implement cross entropy in the MNIST DNN example.

```python
# by cangye@hotmail.com
```
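For reference, here is a minimal, framework-free sketch of the quantity the loss should compute. It assumes the network's last layer produces 10 unnormalized logits per image and that labels are one-hot vectors, as in the usual MNIST setup. The function names are hypothetical, and plain Python is used only to show the arithmetic. In TensorFlow the same value is typically obtained with `tf.nn.softmax_cross_entropy_with_logits` followed by `tf.reduce_mean`.

```python
import math


def log_softmax(logits):
    # Subtract the largest logit before exponentiating so exp() cannot overflow.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def softmax_cross_entropy(batch_logits, batch_one_hot):
    """Batch mean of -sum_k y_k * log(softmax(z)_k).

    batch_logits:  list of logit lists (assumed 10 per MNIST image)
    batch_one_hot: list of one-hot label lists of the same length
    """
    total = 0.0
    for logits, y in zip(batch_logits, batch_one_hot):
        log_p = log_softmax(logits)
        total -= sum(yk * lpk for yk, lpk in zip(y, log_p))
    return total / len(batch_logits)


if __name__ == "__main__":
    # Uniform logits over 10 classes give a loss of log(10).
    print(softmax_cross_entropy([[0.0] * 10], [[1] + [0] * 9]))
```

Working with log-softmax directly, rather than applying `log` to softmax outputs by hand, avoids both overflow in `exp` and `log(0)` when one probability underflows; this is the usual reason to prefer the fused TensorFlow helper.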

In the IRIS example, implement cross entropy to replace MSE and sigmoid.

```python
# by cangye@hotmail.com
```
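One way to see why this swap helps is to compare the gradient each loss sends back to the logits on a single sample. The sketch below assumes three output logits (one per iris species), a one-hot label, and that the original setup applies an element-wise sigmoid followed by mean squared error. The helper names are hypothetical; plain Python is used only to show the arithmetic.

```python
import math


def sigmoid(v):
    # Numerically stable logistic function for large |v|.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def mse_sigmoid_loss_and_grad(z, y):
    """Assumed old setup: per-class sigmoid outputs with mean squared error."""
    p = [sigmoid(v) for v in z]
    n = len(z)
    loss = sum((pk - yk) ** 2 for pk, yk in zip(p, y)) / n
    # dL/dz_k = 2/n * (p_k - y_k) * p_k * (1 - p_k); the sigmoid factor
    # p_k * (1 - p_k) goes to zero when the output saturates.
    grad = [2.0 / n * (pk - yk) * pk * (1.0 - pk) for pk, yk in zip(p, y)]
    return loss, grad


def softmax_ce_loss_and_grad(z, y):
    """Replacement: softmax outputs with cross entropy."""
    m = max(z)
    log_sum = m + math.log(sum(math.exp(v - m) for v in z))
    log_p = [v - log_sum for v in z]
    loss = -sum(yk * lp for yk, lp in zip(y, log_p))
    # For a one-hot y, dL/dz_k simplifies to softmax(z)_k - y_k.
    grad = [math.exp(lp) - yk for lp, yk in zip(log_p, y)]
    return loss, grad


if __name__ == "__main__":
    z = [-8.0, 8.0, 0.0]  # confidently predicts class 1
    y = [1, 0, 0]         # true class is 0
    print(mse_sigmoid_loss_and_grad(z, y)[1][0])  # roughly -2.2e-4
    print(softmax_ce_loss_and_grad(z, y)[1][0])   # roughly -1.0
```

With sigmoid plus MSE, the gradient carries the factor p(1 - p), which is nearly zero once the sigmoid saturates, so a badly wrong prediction learns slowly. With softmax plus cross entropy, the gradient with respect to the logits reduces to p - y, which stays large exactly when the prediction is wrong.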